Nicky Case’s AI Safety is a Must-Read

AI Safety for Fleshy Humans is a must-read. Should you be worried about AI? Is it (or will it be in the near future) dangerous, toxic, racist, sexist, harmful, out-of-control? Will it kill everyone? How can we prevent this?

This topic has various names: AI-Risk, AI-Safety, AI-Alignment, AI-Ethics, and p(doom). Nicky Case gives a great overview of the problems and of the approaches that don’t work. (At some point in the next couple of months, Part 3 of the website will cover the possible approaches that do work.)

(Nicky Case is the same person who created The Evolution of Trust simulation—another site that I believe everyone must go through.)

ChatGPT Hallucinations will get you fired

Here is another high-profile case of a person getting fired for mistakes ChatGPT made. The new Francis Ford Coppola movie, Megalopolis, got mixed reviews from critics. To prove that critics are idiots, a marketing consultant suggested that the trailer for Megalopolis show how, in the past, critics gave bad reviews to Coppola’s earlier movies, like The Godfather, which are now considered all-time greats. The only problem is that he used ChatGPT to find these old negative reviews, and ChatGPT promptly hallucinated fake ones. (What’s a ChatGPT hallucination?) “For example, the trailer claimed that Pauline Kael wrote in the New Yorker that ‘The Godfather’ was ‘diminished by its artsiness.’ Kael in fact loved the movie.”

The trailer has been withdrawn, abject apologies have been tendered, and the marketing consultant no longer works with Lionsgate.

Of course, this is a widespread problem, and it is likely to affect everyone, including you, unless you’re careful.

Link.

What’s in Claude’s System Prompt?

Nice analysis of Claude 3.5 Sonnet’s System Prompt. (What’s a system prompt?) Check it out to see how Claude handles sensitive topics, how it refuses to identify any faces in images it is shown (“face-blindness”), and how it is trying to be less annoying (“Claude avoids starting responses with the word ‘Certainly’ in any way”). Reading the entire system prompt is also instructive.

Link.

Why managers will be better at using LLMs than programmers

Programmers make a lot of money because they’re great at giving precise instructions (“programs”) to computers that are good at following precise instructions and getting reliable results. LLMs don’t need precise instructions. And they are unreliable. Both of these things are major problems for programmers. But this is the life of managers: getting reliable results out of unreliable juniors.

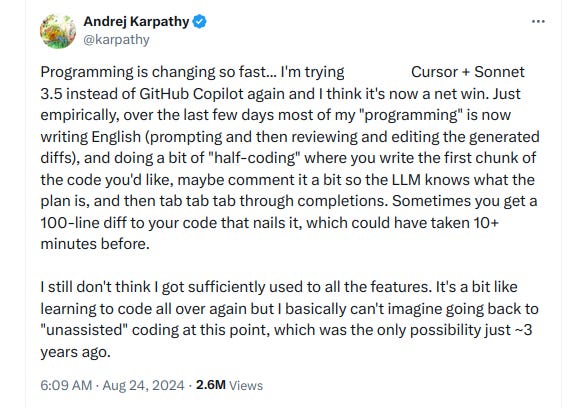

Karpathy recommends Cursor.ai for programming instead of VS Code + Copilot

If you are a programmer and haven’t yet tried a Gen AI-enabled IDE, you should. Here’s a more detailed video about Cursor.ai.

Learning by Teaching ChatBots

This professor of mathematics incorporated Gen AI in his course in a different (and exciting) way. He made the students teach Gen AI chatbots how to solve the problems and exams for that class, by creating appropriate prompts, evaluation rubrics, and problem sets on which to evaluate the chatbots.

Why is this so cool? For one, it is a well-known fact that the best way to learn is by teaching. In this course, the students learned that creating rubrics (the rules by which to grade the chatbot’s attempts at solving problems) and creating the problem sets for the chatbot to solve require you to understand the material much more deeply than simply “learning” it and solving the problems yourself.
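To make the idea of a rubric concrete, here is a minimal sketch of rubric-based grading of a chatbot's answer. Everything in it is an assumption for illustration: the `Criterion` and `grade` names, the sample problem, and the simple string checks are hypothetical and are not taken from the professor's course.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Criterion:
    """One rubric rule: what to look for and how many points it is worth."""
    description: str
    points: int
    check: Callable[[str], bool]  # returns True if the answer satisfies the rule


def grade(answer: str, rubric: List[Criterion]) -> int:
    """Total the points for every rubric criterion the answer satisfies."""
    return sum(c.points for c in rubric if c.check(answer))


# Hypothetical rubric for the problem "Solve 2x + 6 = 0".
rubric = [
    Criterion("States the correct solution", 2, lambda a: "x = -3" in a),
    Criterion("Shows the intermediate step", 1, lambda a: "2x = -6" in a),
]

# A made-up chatbot answer to grade against the rubric.
chatbot_answer = "Subtract 6 from both sides: 2x = -6. Divide by 2: x = -3."
score = grade(chatbot_answer, rubric)
max_score = sum(c.points for c in rubric)
print(f"{score}/{max_score}")
```

Even in this toy version, writing the checks forces you to decide what a correct solution must contain, which is the deeper understanding the paragraph above describes.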

Write a lot: as training data for your AI-twin

AI-blogger Gwern says that this is an excellent time to write a lot of blogs and social media posts and, in general, to create a lot of content. Soon it will be possible to have LLMs digest all of your creative output and then create an AI digital twin of you, and maybe that’s a good thing that opens up a bunch of interesting possibilities?

Temporarily Giving Up On Perplexity

For a few months, I was using Perplexity regularly, as a source of information somewhere between Google Search and ChatGPT. The problem with ChatGPT was that it wasn’t great at factual and up-to-date information: it would hallucinate. The problem with Google Search is that it doesn’t really understand your question, and instead of an answer, it just gives a bunch of links matching the keywords in your question. Perplexity struck the right balance in the middle: it uses LLMs to understand the question, uses a search engine to fetch the relevant links, and then uses LLMs to summarize the results into a coherent answer.

Except that, in the past few weeks, I find ChatGPT doing the same thing better than Perplexity. Its ability to browse the web and summarize the results has improved. So, temporarily, I’ve stopped using Perplexity. But who knows, that might again change in a month.
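The three-step pipeline described above (understand the question, fetch links, summarize) can be sketched roughly as follows. This is only an illustration under stated assumptions, not how Perplexity or ChatGPT is actually implemented: `answer_with_search`, `SearchResult`, `fake_llm`, and `fake_search` are hypothetical names, and the LLM and search engine are replaced by stand-in functions so the sketch runs without any network access.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class SearchResult:
    url: str
    snippet: str


def answer_with_search(
    question: str,
    llm: Callable[[str], str],
    search: Callable[[str], List[SearchResult]],
    max_results: int = 3,
) -> str:
    """Understand the question, fetch links, then summarize them."""
    # Step 1: the LLM turns the natural-language question into a search query.
    query = llm(f"Rewrite as a short web search query: {question}")
    # Step 2: a search engine fetches links relevant to that query.
    results = search(query)[:max_results]
    # Step 3: the LLM summarizes the fetched snippets into one answer.
    sources = "\n".join(
        f"[{i}] {r.url}: {r.snippet}" for i, r in enumerate(results, start=1)
    )
    prompt = (
        "Answer the question using only these sources.\n"
        f"Question: {question}\nSources:\n{sources}"
    )
    return llm(prompt)


# Stand-ins (assumptions): a real system would call an LLM API and a search API.
def fake_llm(prompt: str) -> str:
    if prompt.startswith("Rewrite"):
        return "megalopolis trailer withdrawn"
    return "Summary based on " + str(prompt.count("http")) + " source(s)."


def fake_search(query: str) -> List[SearchResult]:
    return [
        SearchResult("http://example.com/a", f"Result about {query}"),
        SearchResult("http://example.com/b", f"More on {query}"),
    ]


answer = answer_with_search(
    "Why was the Megalopolis trailer pulled?", fake_llm, fake_search
)
print(answer)
```

Passing the LLM and the search engine in as plain functions keeps the sketch independent of any particular provider, so either half can be swapped out, which mirrors how the balance between these tools keeps shifting.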

LLMs are now helping people flirt on dating apps

Apparently, Tinder, Bumble, and other dating apps are building Gen AI-based virtual wingmen. The LLM assistants in their apps will help their customers “in crafting compelling chat-up lines, building attractive profiles, and even providing feedback on flirting techniques,” according to recent reports.

Using ChatGPT App as a Customized Voice Recognition Tool

If you don’t like typing, you can use your mobile’s speech-to-text capabilities for voice typing. The iPhone has one built in. Google’s keyboard provides one on Android. WhatsApp gives you one. But I don’t like any of those because they make mistakes, omit punctuation, and don’t understand some of my words. The ChatGPT app, however, does a far better job of capturing the exact sentence by intelligently understanding the context. Also, it can be customized for words and phrases that are unique to your situation. See, for example, this tweet:

Good one!