ChatGPT's Great New (Paid) Features: o1 and Advanced Mode

Once again, ChatGPT's paid subscription is justified

Most of last year, I was saying that anyone who can afford the monthly subscription fee for ChatGPT Plus ($20 + GST) should get it. Then, from May to August, I stopped saying that, because ChatGPT’s free service, with ChatGPT-4o, was good enough for most purposes. But now there are two new features, ChatGPT-o1-preview and ChatGPT-Advanced-Voice-Mode, that are only available to paid subscribers, and once again it makes sense to get a paid subscription.

The rest of this post is a quick overview of what these two things are and why they’re great.

o1

A few weeks back, OpenAI released a new series of models that are specifically trained to do “complex reasoning”. These are called o1 (which most of us don’t have access to), o1-preview (which is what I’ll be talking about in this article most of the time) and o1-mini (a cheaper, faster variant). These models are able to do a bunch of things which used to cause problems for ChatGPT-4, ChatGPT-4o, Claude 3.5 Sonnet, and Gemini Pro (the best models in the market before o1 came along).

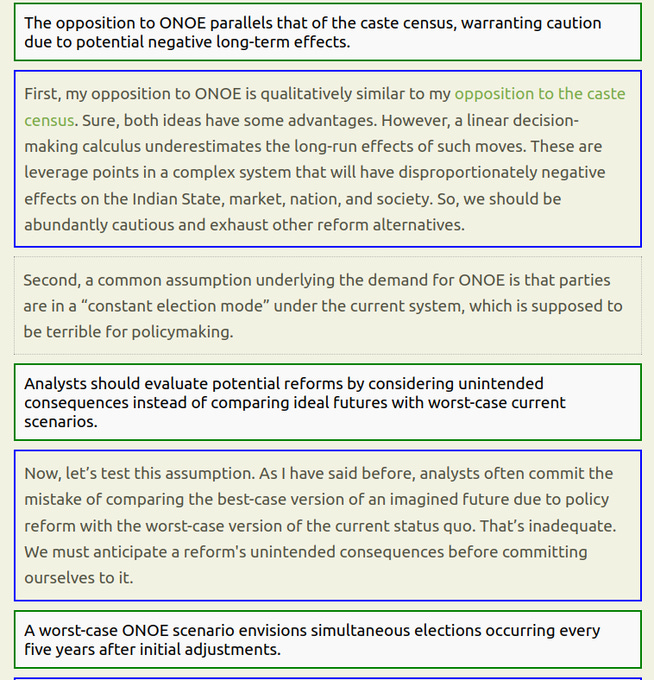

A few days back, in one hour, I was able to use o1-preview to write an extension for my web browser that produces one-line summaries of every paragraph on any webpage. That way, I can read the summary and decide whether to read the full paragraph in detail or skip to the next one. This is very impressive because 1) I have no idea how to write a browser extension, 2) I am not good at JavaScript programming, and 3) this is something that I’ve wanted to do for a long time, but every time I tried doing it with the older models, they all failed. In fact, even this time, I tried to use Claude 3.5 Sonnet and ChatGPT 4o to write the extension and they failed, but o1-preview succeeded.
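To give a rough idea of what the core of such an extension does, here is a minimal sketch in TypeScript. This is not the code o1-preview wrote for me; it is an illustration under assumptions. The names `Summarizer`, `splitParagraphs`, `firstSentence` and `summarizePage` are all hypothetical, and `firstSentence` is only a placeholder that keeps the first sentence of a paragraph. A real extension would read paragraphs from the page and send each one to a language model instead.

```typescript
// Hypothetical type: any function that turns one paragraph into a one-line summary.
// In a real extension this would call a language model (and would be asynchronous).
type Summarizer = (paragraph: string) => string;

interface ParagraphSummary {
  index: number;      // position of the paragraph on the page
  summary: string;    // the one-line summary shown to the reader
  paragraph: string;  // the full text, in case the reader wants it
}

// Split page text into paragraphs on blank lines, collapsing extra whitespace
// and dropping empty chunks.
function splitParagraphs(pageText: string): string[] {
  return pageText
    .split(/\n\s*\n/)
    .map((p) => p.trim().replace(/\s+/g, " "))
    .filter((p) => p.length > 0);
}

// Placeholder summarizer (an assumption, not a real model call):
// keep the first sentence, truncated to maxLen characters.
function firstSentence(paragraph: string, maxLen: number = 80): string {
  const match = paragraph.match(/^.*?[.!?](?=\s|$)/);
  const sentence = match ? match[0] : paragraph;
  return sentence.length > maxLen
    ? sentence.slice(0, maxLen - 3) + "..."
    : sentence;
}

// Produce one summary per paragraph, using whichever summarizer is passed in.
function summarizePage(
  pageText: string,
  summarize: Summarizer = firstSentence
): ParagraphSummary[] {
  return splitParagraphs(pageText).map((paragraph, index) => ({
    index,
    summary: summarize(paragraph),
    paragraph,
  }));
}
```

The summarizer is passed in as a parameter so that the placeholder can be swapped for a real model call without touching the rest of the logic.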

ChatGPT 3.5 was as good as an average high-school student. ChatGPT 4 and 4o are probably as good as average undergraduate students. ChatGPT o1-preview is as good as a PhD student. A “mediocre, but not completely incompetent, grad student” is how Terence Tao, one of the greatest living mathematicians, has described it.

This is what Terence Tao says about it:

Here are some concrete experiments (with a prototype version of the model that I was granted access to). [Here] I repeated an experiment from [here] in which I asked GPT to answer a vaguely worded mathematical query which could be solved by identifying a suitable theorem (Cramer's theorem) from the literature. Previously, GPT was able to mention some relevant concepts but the details were hallucinated nonsense. This time around, Cramer's theorem was identified and a perfectly satisfactory answer was given.

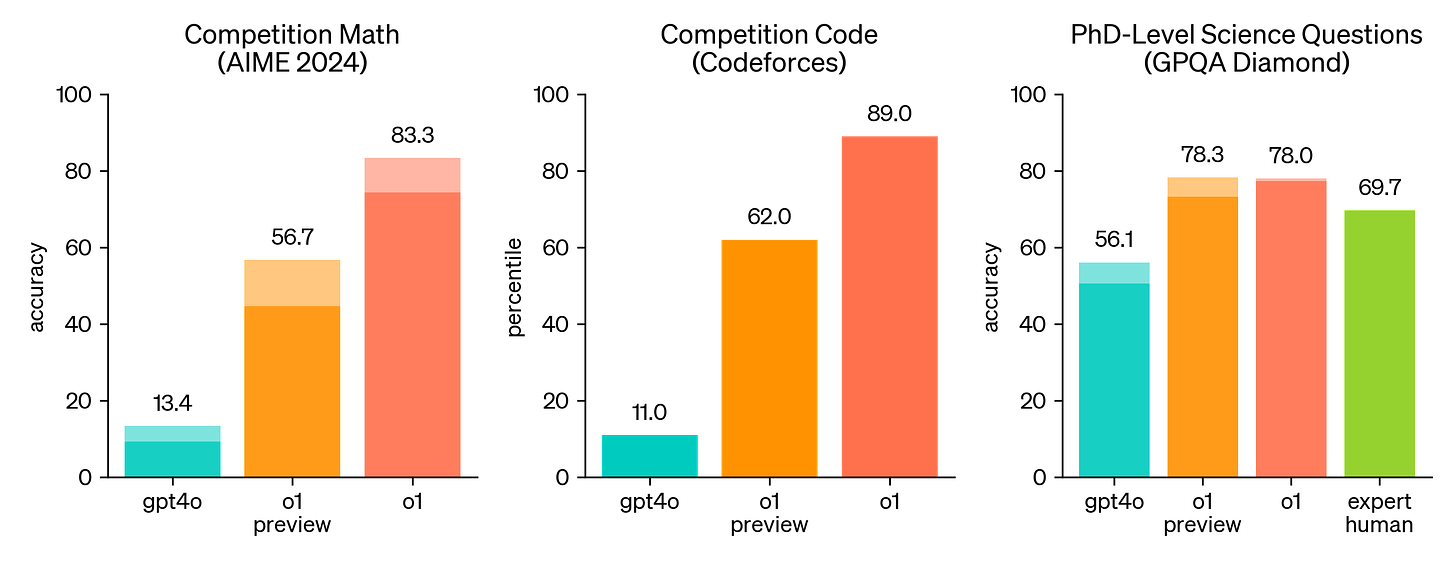

This is the improvement in competitive maths, competitive programming, and PhD level science from 4o to o1-preview to o1:

OpenAI o1 ranks in the 89th percentile on competitive programming questions (Codeforces), places among the top 500 students in the US in a qualifier for the USA Math Olympiad (AIME), and exceeds human PhD-level accuracy on a benchmark of physics, biology, and chemistry problems (GPQA).

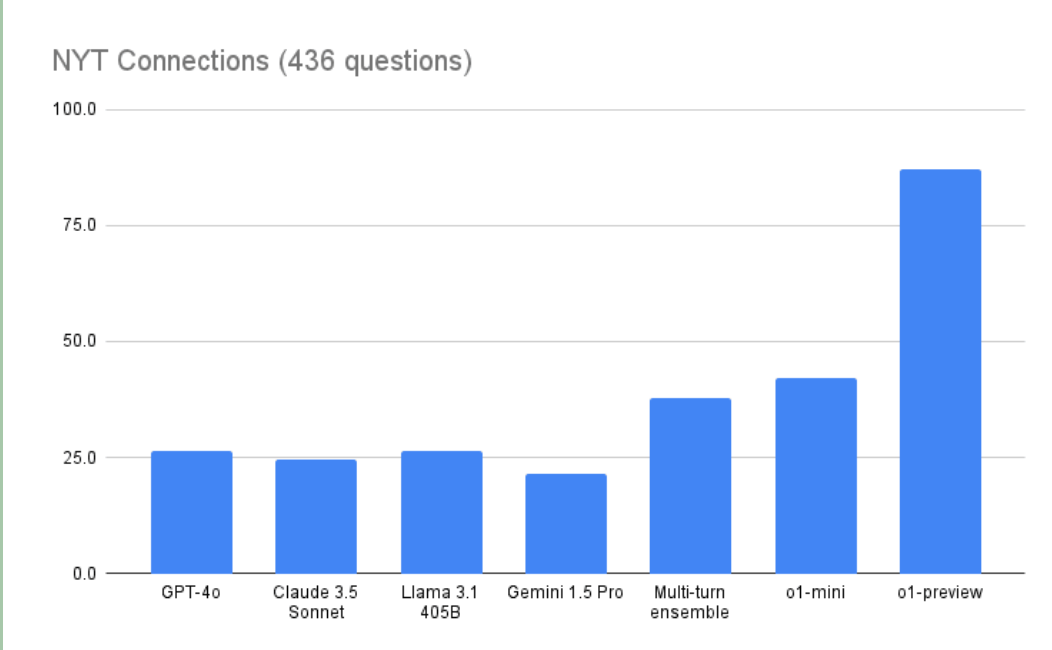

Ethan Mollick was able to get it to solve cryptic crosswords, which were quite difficult for earlier LLMs. It even solves the New York Times’ Connections puzzles.

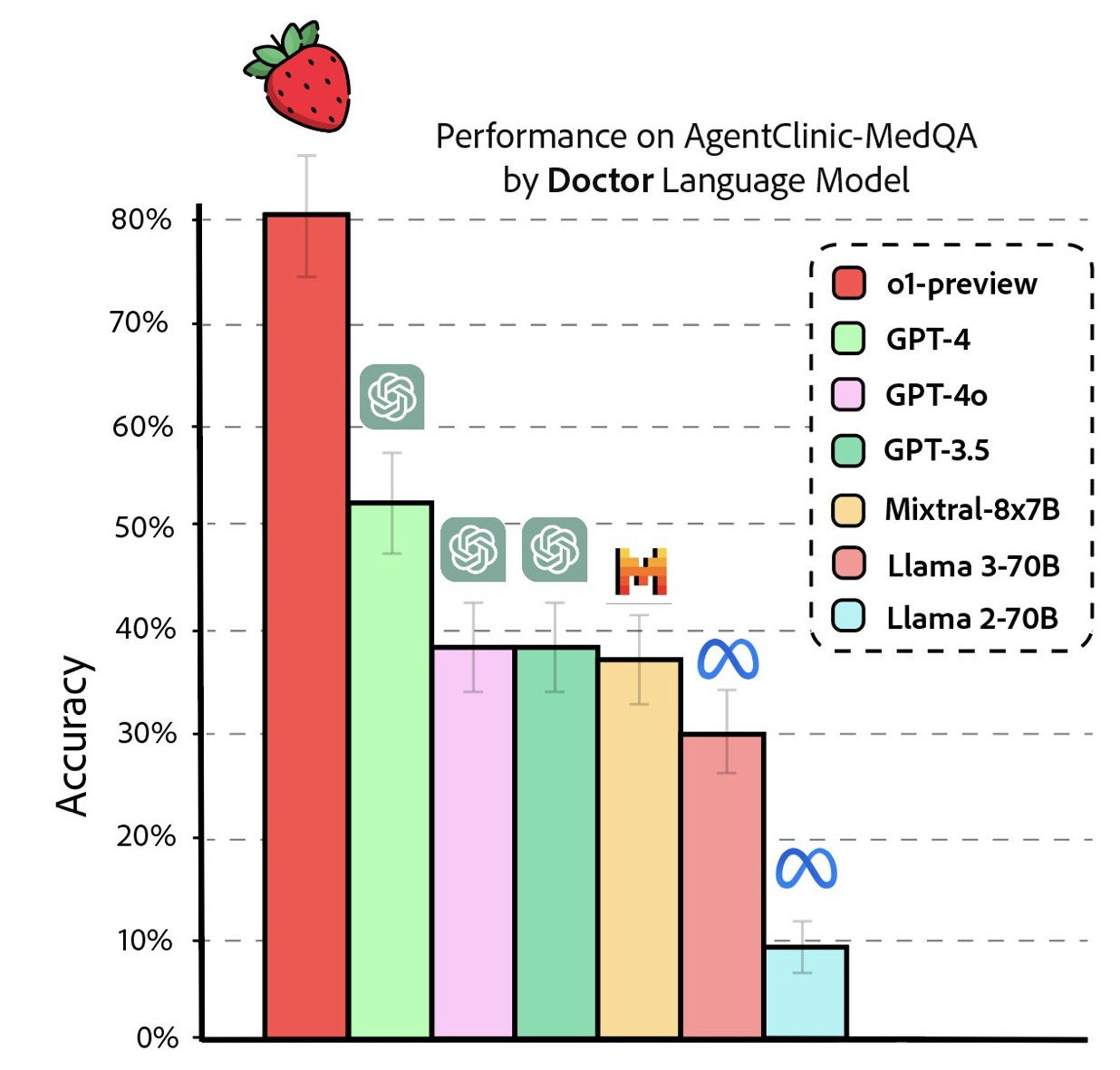

Here’s what it does with medical questions.

Derya Unutmaz claims:

This is the final warning for those considering careers as physicians: AI is becoming so advanced that the demand for human doctors will significantly decrease, especially in roles involving standard diagnostics and routine treatments, which will be increasingly replaced by AI.

This is underscored by the massive performance leap of OpenAI’s o-1 model, also known as the “Strawberry” model, which was released as a preview yesterday. The model performs exceptionally well on a specialized medical dataset (AgentClinic-MedQA), greatly outperforming GPT-4o. The rapid advancements in AI’s ability to process complex medical information, deliver accurate diagnoses, provide medical advice, and recommend treatments will only accelerate.

Keep in mind that o1 is not better at everything. Ethan Mollick points out:

To be clear, o1-preview doesn’t do everything better. It is not a better writer than GPT-4o, for example. But for tasks that require planning, the changes are quite large.

But the short summary is: if you want to do complicated things involving planning or complex reasoning, o1-preview is great.

Advanced Voice Mode

In general, “voice mode”, the ability to talk to ChatGPT, is very powerful. Having a conversation with ChatGPT is very different from typing prompts and reading answers. And now there is a new “advanced voice mode”, available only on the mobile app and only to paid subscribers, which makes the conversation much more natural: it responds immediately (instead of the 10-second lag that the default voice mode has), it allows you to interrupt its responses mid-sentence, and it can sense and interpret your emotions from your tone of voice and adjust its responses accordingly. As a result, the conversation flows more smoothly, and when you use it, you’ll realize that you have a very different kind of interaction with the LLM.

There are a number of interesting things advanced voice mode can do. For example, it can do real-time translations. So, an English speaker and a Hindi speaker can have ChatGPT listening to their conversation and translating all the English speech to Hindi and all the Hindi speech to English. I tried it out just now and it works like a charm.

It is great for learning things when you’re busy doing something else (like exercising, driving, or cooking). This morning, while exercising, instead of listening to a podcast, I spent an hour chatting with Advanced Voice Mode. For example, I asked it to explain to me what a coronary calcium scan is, and then asked a bunch of follow-up questions: why it is getting popular as a way to check your risk for heart problems, how it works, what its advantages and disadvantages are compared to other methods, and how likely doctors are to suggest unnecessary interventions (angioplasties) after tests like these. I am not well informed about this topic, and it answered all the questions patiently. It was like talking to a friendly doctor who was on the phone with me. I could switch from English to Hindi to Marathi and it all worked seamlessly. (Its Hindi and Marathi vocabulary is a bit unnatural and formal, but otherwise it works well.)

Search the internet for more ways in which people are using it. For example, here are a bunch of examples, including helping tune a guitar, telling a story with dramatic and distinct voices for each character, using Advanced Voice to improve your sales pitch, and more.

Of course, there are other obvious advantages: kids who can’t read or write, older people, illiterate people, and non-English speakers can all interact with it and benefit from advanced voice mode. You can use it as a language tutor, and I’m sure it will work far better than using Duolingo, or even doing a formal language course. And of course, it works very well as a transcription service to listen to a conversation and convert it to text (I haven’t yet tried it with more than two people talking, and I assume it won’t work as well).

There are a number of ways in which it is flaky, but surely that will improve over time. And overall, it is a big new capability, and the more we use it the more interesting uses we will find for this capability. And at some point in the near future, they will release the full version which can do audio and video natively and that will be even cooler: