Learning with LLMs

A guide to using Google's LearnLM in particular and LLM-based tutoring in general

My friend Ashish, over at EconForEverybody, has a series of articles on how to use the latest LLMs as tutors for self-learning. I’m sure all of you have used LLMs to learn things, but Ashish goes through several detailed examples and prompts, and we can all improve our learning and LLM usage by studying them.

Ashish has been a teacher for over 15 years and is one of the few expert-level users of LLMs that I know. So, reading his articles, which combine LLM use with teaching/learning, is highly recommended.

He starts by explaining LearnLM, a new offering from Google:

LearnLM is a family of models and capabilities fine-tuned for learning, built in collaboration with experts in education. These advancements and improvements are now available directly in Gemini, enhancing educational experiences and applications.

Unfortunately, Google’s AI tools are not easy to use and sometimes aren’t even easy to find. So Ashish walks us through Gemini’s Gems, where to find LearnLM, how to use LearnLM, and the five different things you can use LearnLM for:

You can build a customized AI tutor to help students prepare for a test

You can build a friendly, supportive AI tutor to teach new concepts to a student

You can have Gemini rewrite provided text so that the content and language better match instructional expectations for students in a particular grade, while preserving the original style and tone of the text.

You can have an AI tutor guide students through a specific learning activity: using an established close reading protocol to practice analysis of a primary source text

You can have an AI tutor help students with specific homework problems

Follow the article, and you’ll be able to set up a tutor for any topic, and it can be customised for a student at any level.

Why would you want to use an AI tutor? Ashish answers:

Remember, dear reader, the truth always lies somewhere in the middle. Yes, this is cool, but it is far from perfect. And yes, a good teacher will likely do a much better job, but a good teacher isn’t available, alas, to all students, and even a good teacher cannot customize their answer to suit every student’s learning style.

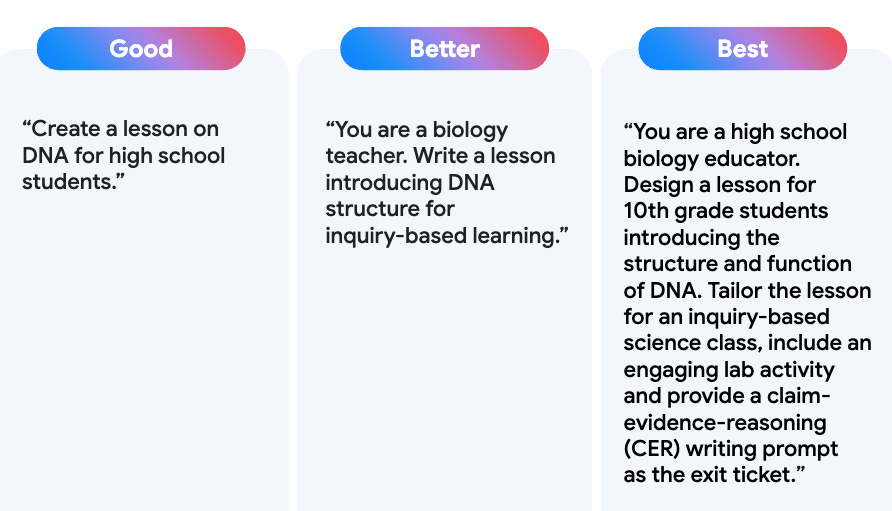

The quality of an AI tutor depends on two things: which LLM you’re using, and the quality of the prompt you use. So, the next post in this series is about how to create good prompts for LLMs using the PARTS framework.

Again, Ashish goes through an example to help you understand how to build a good prompt.

And then in FY Stats, Using PARTS, he shows a full example of a very detailed tutoring prompt for a specific subject. The prompt is huge: 800+ words, about twice the size of this article. Check it out to see what a good prompt consists of. You can use that template to create a similar prompt for any subject you want to learn (or want your students/children to learn).

I recommend that you read all three articles, 1, 2, and 3, in full. And subscribe to EconForEverybody even if you’re not interested in economics. Ashish promises several more articles on learning with LLMs, and even otherwise, knowing more about economics gives you a minor superpower.