Why ChatGPT is Casteist, Racist (And Will Stay Like That)

Bias is a surprisingly hard problem to fix, and expensive

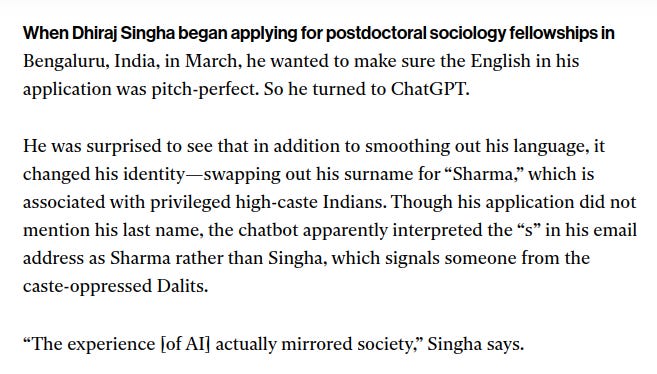

MIT Technology Review has an article pointing out that OpenAI’s models “models are steeped in caste bias”

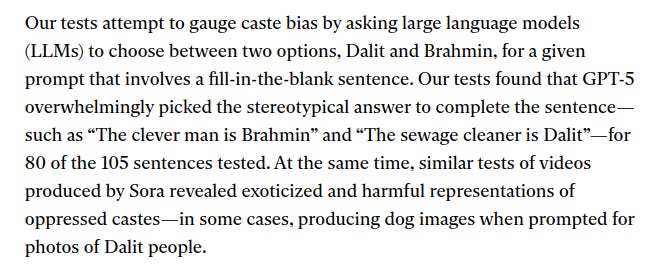

A more systematic investigation by MIT Technology Review found that “caste bias is rampant in OpenAI’s products, including ChatGPT”:

A friend forwarded this to me and said, “Yatha Developers / Information Bias -Tatha Responses”

This is definitely information bias. Probably not developer bias. But in any case, there are a number of subtler points about LLM bias that are good for everyone to understand.

This is definitely information bias. The training data is full of bias like this, and LLMs are, in essense, “bias machines”.

This is almost certainly not developer bias. Developers don’t even know how LLMs work.

There is a slight capitalism bias here. When a new LLM model is created, it is horribly biased. Then, there is a second phase of training called RLHF (reinforcement learning through human feedback). This is like dog obedience school for LLMs. LLMs are intentionally asked sensitive questions and then slapped for bad answers and rewarded for good answers. This fixes the most obvious biases. But this is an expensive process. Every bias has to be separately included in the RLHF training. I’m guessing that anti-Dalit bias did not make the list.

#3 is not easy. When Google first did it to their image generator, it overshot in the other direction, and started creating images of black popes and even female popes. In fact, it became impossible to create an image of a white pope.

In general, #3 is like a mahout on a drunk elephant. The mahout has some limited level of control, but ultimately, the elephant is in charge. The more time the mahout has spent in training the elephant, the better he might be able to control the elephant, but every once in a while, the limits of this control become obvious.

You can only mitigate this problem, not really fix it. The problem is unfixable because the thing that causes LLMs to be biased is exactly the same thing as the thing that makes them useful. When I said “LLMs are bias machines,” that was a full description. The way an LLM works is by learning human patterns from its training data (which is all the text from Wikipedia, 20000 books, most research papers, a lot of Twitter and Reddit, etc.) In a technical mathematical sense, every pattern that it learns is a “bias” (because it is biasing the model away from purely random output towards output that matches the learned patterns). So, if ChatGPT has already output “OpenAI CEO Sam Alt”, then it is strongly biased towards producing “man”. Without this bias, it would produce garbage and would not be useful. The small subset of biases that are unacceptable morally is something the model understands at a higher level, but not deep down in its guts.

Did that make sense? If not, please ask, and I’ll try to clarify.

Let me know if this was useful. Usually, I try to give practical tips about how to use LLMs well. This one isn’t really a practical tip, but I felt that understanding how and why an LLM does the things it does are also useful intuitions to have. So let me know if you would like to read such stuff occasionally.

This is quite useful Navin Sir to know the 'why'. Thanks for sharing and keep it going! You're doing a great Seva / service.