Why Did I Post a Question On Twitter Instead of Asking ChatGPT?

Should you ask LLMs all your questions? Should you stop asking humans now that we have ChatGPT?

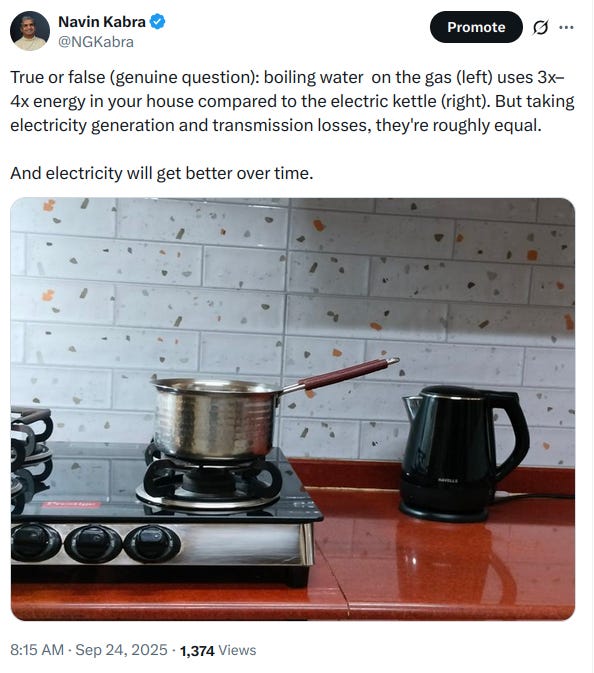

A few weeks ago, I asked this question on Twitter:

The actual question and its answer aren’t important as far as this article is concerned. The important part is what a follower asked me in response:

My answer was:

But this exchange raises a number of important points, and in the age of AI, I think it is really important to understand all of them clearly.

I think the following statements are all true:

Any time you have a question like this, it is probably a good idea to ask a good “Thinking” AI for its opinion. It will almost always give you some interesting information.

You should ask ChatGPT 5 Thinking, Claude 4.5 Sonnet, Claude 4.1 Opus, or Gemini 2.5 Pro (soon to be Gemini 3.0 Pro, I think). Do not ask ChatGPT 5 Auto, ChatGPT 5 Fast, or ChatGPT 5 Thinking Mini. Do not ask Gemini 2.5 Flash. You can ask Grok, but be very, very wary of its answer; it hallucinates more than the others. Definitely do not ask Meta.AI.

Do not treat the LLM’s answer as the truth. It can hallucinate, it can be wrong, or it can simply be regurgitating the consensus of people on the internet who are themselves wrong. You need to ask it for its sources, click on those links, and form a judgement on how reliable those sources are and whether they actually say what the LLM claims they say. Sometimes the LLM simply misrepresents the source.

I regularly see people on the internet treat LLM outputs as if they were the gospel truth. To prove a point, they’ll paste a screenshot of a ChatGPT answer and behave as if that settles the matter. Or they ask, “@Grok is that true?” and completely trust Grok’s answer. We need to fight this tendency.

When the topic is politically sensitive (as it happens to be in this case, because the environment and climate change are involved), you definitely should not trust LLMs. You should ask people. (Which is why I asked on Twitter.)

Of course, people are even less trustworthy. But by now, you should have clear “priors” on which of your friends and social media connections are trustworthy (on this particular topic). If you’re not sure how this process works, or you don’t know what the word “prior” means in the previous sentence, check out my video on Bayesian Thinking.

If I Have To Verify Every Answer, Why Bother With ChatGPT?

One question that arises: if I need to ask people anyway after asking an LLM, why bother asking ChatGPT at all?

There are several reasons:

In some cases, the question isn’t important or sensitive enough to need 100% accuracy. LLMs (especially the good ones I listed earlier) are right most of the time, and for many casual, curiosity-driven questions, their answers are good enough.

In some cases, you can click through to the sources, and those are enough to convince you that the answer is correct.

In some cases, the LLM gives you both sides of the argument, and that is all you need. Getting a rough idea of the two sides is more important than knowing which side is right. Often, the truth is in the middle anyway.

In some cases, you do have to ask people, but the LLM gives you enough background information that you can ask a much more intelligent and precise question, and you can ask better follow-up questions after they’ve answered. Without the LLM conversation, your question might be too vague or ambiguous, and then the conversation with the humans takes longer than it should, or you end up talking past each other.
